How The Baltimore Banner gained valuable audience insights from AI-powered content tagging

The Pulitzer Prize-winning local news outlet developed an AI-powered story classification system, tested it with 1,400 Orioles articles and learned: Focus less on stats and more on people

Tour of the Baltimore Banner newsroom during the Hacks / Hackers reception

The Baltimore Banner has been in the spotlight lately. On May 5, it won a Pulitzer Prize for Local Reporting for its investigative series on Baltimore’s fentanyl crisis. One day later, still visibly in a celebratory mood, it hosted the opening night reception of the inaugural Hacks / Hackers summit in Baltimore. And yesterday, Nieman Lab chose the Banner as the featured example in a story about successful partnerships with The New York Times through its Local Investigations Fellowship. Not bad for a digital local news startup that is not even three years old.

Some background: The Baltimore Banner is a nonprofit media outlet that combines community-focused journalism with innovation. It is a role model for sustainable local journalism. It launched in June 2022 with $50 million in initial funding and has a rapidly growing newsroom with 85 journalists and 55,000 paying subscribers. It generated more than $13 million in revenue in 2024 from three primary sources: subscriptions (45%), advertising (35%) and philanthropy (22%). Their goal is to become self-sustaining within five years, and they are well on their way.

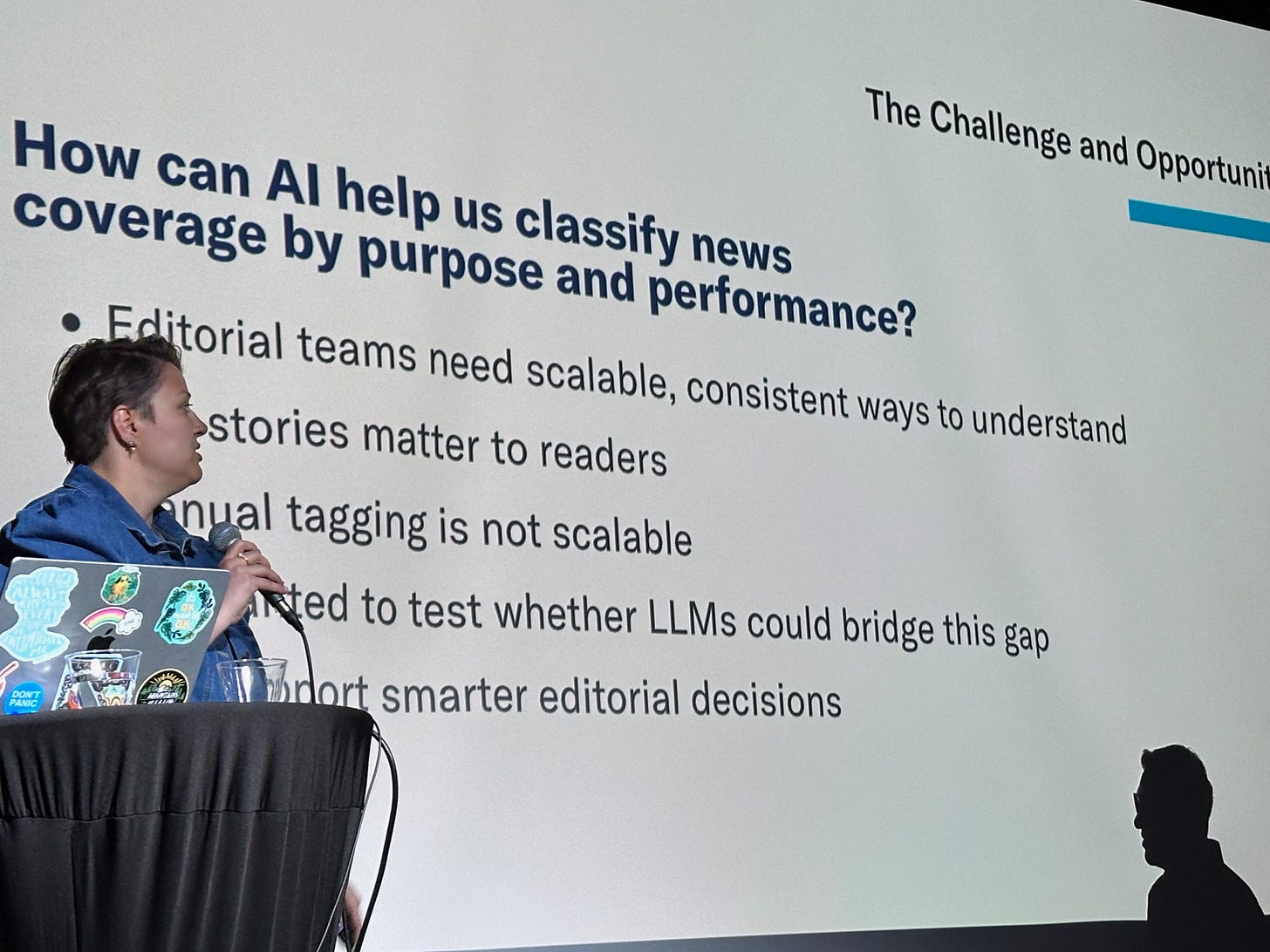

At the Hacks / Hackers summit, Ali Tajdar, Head of Insights and Analytics at the Baltimore Banner, and Emma Patti, Managing Editor for Digital, Audience and Visuals, showcased how the Banner built an AI-powered system for story classification to better understand what kind of content resonates with their audience and leads to subscription conversions. For this purpose, the news org needed to build a new tagging system from scratch because their existing CMS taxonomy was too limited for meaningful analysis. “We are a baby company, and when we started, we were maybe publishing two or five articles a day. We weren't exactly thinking of the scale where we are now, where we're publishing like 30 to 45 articles a day”, Patti explained.

Rather than manually re-tagging three years of content, the team designed a test to find out whether LLMs could classify news coverage reliably at scale, using 1,400 pieces of content about Baltimore's Major League Baseball team, the Orioles.

This is how they did it:

Implementation Process

Their approach followed a five-step framework:

Developing an Editorial Taxonomy: They started with 15 classifications, refined down to 10 content categories including feature, profile, analysis, explainer, spot news, and general coverage.

Prompt Engineering: They tested eight different prompt iterations, refining definitions from simple two-sentence descriptions to comprehensive explanations that accounted for nuances between content types. Emma Patti noted that “we kept adding more and more clarity to these definitions to enhance the accuracy of the results.” The final prompts included detailed role descriptions (asking the AI to act as a news editor) and specific output formatting requirements.

Model Testing: Using AWS Bedrock, they tested six different LLMs in parallel to find the most accurate model for their needs. (AWS Bedrock is a fully managed service from Amazon Web Services for building and scaling generative AI applications using a wide range of foundation models.) Claude Sonnet performed best with 84% accuracy compared to human editors. Other models like Amazon Titan and Mistral AI delivered significantly poorer results for this specific use case.

Human Verification: The editorial team manually classified over 1,000 articles to create a baseline for measuring AI accuracy.

Iteration and Scaling: They added context via retrieval-augmented generation (RAG), refined their approach through feedback loops and eventually applied it to approximately 1,400 Orioles stories. The whole process took about three weeks and cost about $50 (roughly 4 cents per article).
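The steps above can be sketched in a few lines of Python. This is a minimal illustration, not the Banner's code: the category descriptions, the prompt wording and the function names `build_prompt`, `accuracy` and `rank_models` are all assumptions made for the example. It shows how a prompt can combine a role description, category definitions and an output format, and how model labels can be scored against a human-labeled baseline.

```python
# Hypothetical definitions for illustration only; the Banner's actual
# category wording was not published in this article.
TAXONOMY = {
    "profile": "A story centered on one person and their background.",
    "analysis": "A story that interprets events or performance.",
    "spot news": "A short, timely report on something that just happened.",
}


def build_prompt(article_text: str, taxonomy: dict[str, str]) -> str:
    """Combine a role description, category definitions and an output format."""
    definitions = "\n".join(f"- {name}: {desc}" for name, desc in taxonomy.items())
    return (
        "You are a news editor classifying stories.\n"
        "Choose exactly one category from this list:\n"
        f"{definitions}\n\n"
        "Answer with the category name only.\n\n"
        f"Article:\n{article_text}"
    )


def accuracy(model_labels: dict[str, str], human_labels: dict[str, str]) -> float:
    """Share of human-labeled articles where the model chose the same category."""
    shared = [aid for aid in human_labels if aid in model_labels]
    if not shared:
        return 0.0
    hits = sum(model_labels[aid] == human_labels[aid] for aid in shared)
    return hits / len(shared)


def rank_models(
    results_by_model: dict[str, dict[str, str]], human_labels: dict[str, str]
) -> list[tuple[str, float]]:
    """Sort models from most to least accurate against the human baseline."""
    scores = {
        model: accuracy(labels, human_labels)
        for model, labels in results_by_model.items()
    }
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    # Toy data: four articles labeled by editors and by two made-up models.
    human = {"a1": "profile", "a2": "analysis", "a3": "spot news", "a4": "profile"}
    results = {
        "model_a": {"a1": "profile", "a2": "analysis", "a3": "spot news", "a4": "analysis"},
        "model_b": {"a1": "analysis", "a2": "analysis", "a3": "profile", "a4": "analysis"},
    }
    for model, score in rank_models(results, human):
        print(f"{model}: {score:.0%}")
```

Keeping the human labels in a separate mapping means the same baseline can be reused each time a prompt or model changes, which is what makes iterations comparable.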

Key technical insights for performance improvement:

Zero temperature parameter to ensure consistent results: “We noticed that every time we ran the LLM, it would give us different results. We had to change some of the hyperparameters of the LLM and set the temperature to zero, so it would just be far more consistent when it gave results”, Ali Tajdar said. (Explanation: With LLMs, a temperature of zero gives the highest possible consistency, whereas a higher temperature allows for more randomness and more creativity in the output. For taxonomy purposes, you need consistency.)

Additional classifications: The output was structured as CSV tables with a primary classification (main category) and a secondary classification (additional context). When both primary and secondary classifications were taken into account, accuracy increased to 93%.

Including confidence scores for classifications: This helped rate the performance of the different LLMs, both overall and for individual content types.
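A rough sketch of how these three techniques could fit together is shown below. It rests on several assumptions that are not confirmed by the presentation: the CSV column names (`article_id`, `primary`, `secondary`, `confidence`), the helper names, and the reading that the higher accuracy figure counts a match on either the primary or the secondary label. The request dictionary follows the shape of the Amazon Bedrock Converse API as commonly used from boto3; the model ID is a placeholder, and no network call is made here.

```python
import csv
import io


def build_request(prompt: str, model_id: str) -> dict:
    """Keyword arguments for a Converse-style call with temperature pinned to 0."""
    return {
        "modelId": model_id,  # placeholder; pass the ID of the model under test
        "messages": [{"role": "user", "content": [{"text": prompt}]}],
        # Temperature 0 reduces run-to-run variation in the classifications.
        "inferenceConfig": {"temperature": 0.0, "maxTokens": 500},
    }


def parse_classifications(csv_text: str) -> list[dict]:
    """Parse model output with assumed columns into typed rows."""
    rows = []
    for row in csv.DictReader(io.StringIO(csv_text.strip())):
        rows.append(
            {
                "article_id": row["article_id"].strip(),
                "primary": row["primary"].strip(),
                "secondary": (row["secondary"] or "").strip(),
                "confidence": float(row["confidence"]),
            }
        )
    return rows


def match_rates(rows: list[dict], human_labels: dict[str, str]) -> tuple[float, float]:
    """Return (strict, lenient) accuracy against human labels.

    Strict counts only primary matches. Lenient also accepts a secondary
    match, which is an assumption about how the higher figure was computed.
    """
    scored = [r for r in rows if r["article_id"] in human_labels]
    if not scored:
        return 0.0, 0.0
    strict = sum(r["primary"] == human_labels[r["article_id"]] for r in scored)
    lenient = sum(
        human_labels[r["article_id"]] in (r["primary"], r["secondary"])
        for r in scored
    )
    return strict / len(scored), lenient / len(scored)


if __name__ == "__main__":
    sample_output = (
        "article_id,primary,secondary,confidence\n"
        "a1,profile,feature,0.92\n"
        "a2,analysis,explainer,0.60\n"
        "a3,spot news,,0.81\n"
    )
    human = {"a1": "profile", "a2": "explainer", "a3": "feature"}
    rows = parse_classifications(sample_output)
    strict, lenient = match_rates(rows, human)
    print(f"strict={strict:.2f} lenient={lenient:.2f}")
```

Keeping the confidence score as a number on each row also makes it possible to average it per model or per category when comparing LLMs.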

Key learnings

The key lessons from this test center on the importance of careful model selection, the value of human expertise, the need for clear editorial guidelines, and the benefits of rapid, cost-effective analysis:

Model selection and testing are critical: The Banner learned that not all AI models perform equally for editorial tasks. They tested multiple large language models and found significant differences in accuracy, highlighting the need to evaluate and choose the right tool for the job.

Human expertise is indispensable: Even with advanced AI, the Banner relied on editors to define taxonomies, refine prompts, and provide a baseline for evaluating AI performance. This underscores that editorial judgment and oversight remain essential for trustworthy results.

Prompt engineering and clear guidelines improve results: The team found that refining prompts and providing detailed, nuanced definitions for content categories led to more accurate and consistent AI outputs. Investing time in prompt development pays off in better AI performance.

AI can reveal actionable business insights: By classifying content at scale, the Banner discovered a strong correlation between specific content types and subscription conversion rates. “It told us that we need to focus less on stats and more on the people behind the plate”, Emma Patti revealed. This insight led the editorial team to shift strategy, investing more in human-centric stories and focusing less on statistical or general coverage, as these were significantly less effective at driving subscriptions.

Implementation can be fast and cost-effective: The initial project was completed in three weeks. With the right infrastructure, the Banner can now process thousands of articles for a few hundred dollars. Ali Tajdar noted that the Banner’s entire archive of 15,000 articles can now be processed for about $600. This demonstrates that AI implementation can be remarkably cost-effective for news organizations and can be efficiently deployed even in resource-constrained newsrooms.
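The cost figures quoted in this article line up with each other, as a quick back-of-the-envelope check shows. The function names below are made up for the illustration, and the calculation assumes that the per-article cost of the pilot carries over unchanged to the full archive, which may not hold if article length or model pricing differs.

```python
def cost_per_article(total_cost_usd: float, n_articles: int) -> float:
    """Average cost of classifying one article."""
    return total_cost_usd / n_articles


def estimate_cost(n_articles: int, per_article_usd: float) -> float:
    """Projected cost, assuming a constant per-article rate."""
    return n_articles * per_article_usd


# Pilot: about $50 for roughly 1,400 Orioles stories.
pilot_rate = cost_per_article(50, 1_400)

# Archive: 15,000 articles, at the rounded 4-cent rate and at the exact pilot rate.
archive_at_rounded_rate = estimate_cost(15_000, 0.04)
archive_at_pilot_rate = estimate_cost(15_000, pilot_rate)

if __name__ == "__main__":
    print(f"pilot cost per article: ${pilot_rate:.3f}")
    print(f"archive at 4 cents per article: ${archive_at_rounded_rate:,.0f}")
    print(f"archive at exact pilot rate: ${archive_at_pilot_rate:,.0f}")
```

At the rounded rate of 4 cents per article, 15,000 articles come to the quoted figure of about $600; at the unrounded pilot rate the estimate is somewhat lower.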

Next steps: The Baltimore Banner plans to expand its AI classification system beyond sports content to other sections and implement these classifications as a formal taxonomy in their CMS, using AI for classifying archive content while having humans tag new content going forward.